Latency Management System Design

Planning a management group design.

In computer networking, latency is an expression of how much time it takes for a data packet to travel from one designated point to another. Ideally, latency will be as close to zero as possible. If the latency in a pipe is low and the bandwidth is also low, the throughput will be inherently low.
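The relationship between latency, bandwidth, and throughput can be illustrated with a small calculation. This is a minimal sketch under a simplifying assumption: a single transfer costs one latency delay plus the time to push the bits through the pipe. The function names and the sample figures are hypothetical and chosen only for illustration.

```python
def transfer_time_s(size_bytes: int, latency_s: float, bandwidth_bps: float) -> float:
    """Time for one transfer: a fixed latency delay plus the serialization time."""
    return latency_s + (size_bytes * 8) / bandwidth_bps


def effective_throughput_bps(size_bytes: int, latency_s: float, bandwidth_bps: float) -> float:
    """Bits actually delivered per second once latency is taken into account."""
    return (size_bytes * 8) / transfer_time_s(size_bytes, latency_s, bandwidth_bps)


if __name__ == "__main__":
    one_megabyte = 1_000_000  # illustrative payload size

    # Low latency (1 ms) but low bandwidth (1 Mbit/s): throughput stays low.
    print(effective_throughput_bps(one_megabyte, 0.001, 1_000_000))

    # Same latency with much higher bandwidth (100 Mbit/s): throughput rises.
    print(effective_throughput_bps(one_megabyte, 0.001, 100_000_000))
```

Under this model, effective throughput can never exceed the bandwidth, so low latency alone does not rescue a narrow pipe.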

If management is convinced, it will establish the system in the organization, and if the client is satisfied, they will be willing to purchase it. Latency is physically a consequence of the limited velocity with which any physical interaction can propagate. Connecting management groups allows alerts and other monitoring data to be viewed and edited from a single console. Latency is measured in units of time, such as hours, minutes, seconds, or milliseconds.

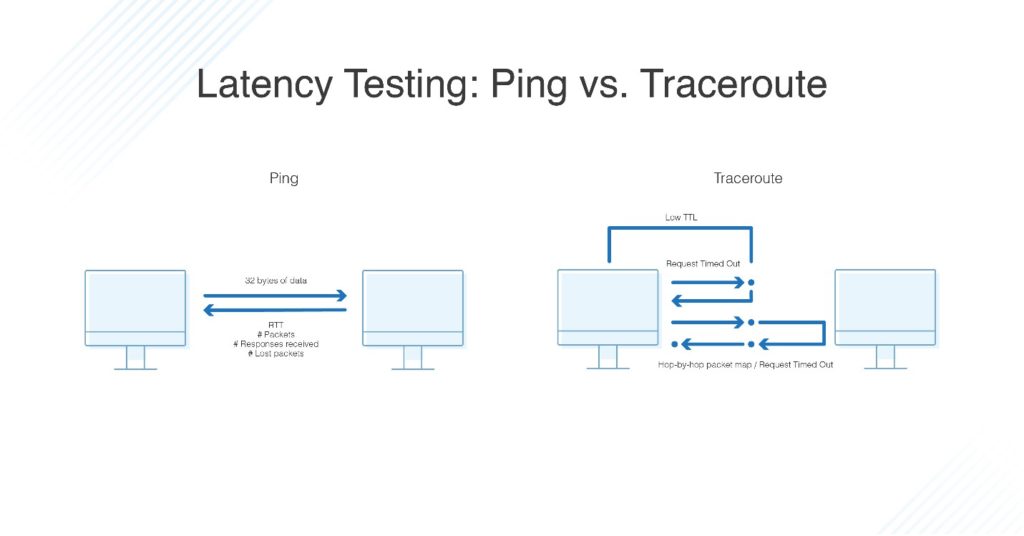

You can use the following steps to guide the discussion. Throughput is the amount of data that can be transferred over a given time period. Network latency can be measured by determining the round-trip time (RTT) for a packet of data to travel to a destination and back again. It is basically a fleshed system in nature, as it tries to replicate the whole system or even some acquisitive part.
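As a rough illustration of the round-trip measurement described above, the sketch below times how long a small payload takes to reach an echo server and come back over TCP. It is not a replacement for tools such as `ping`. The helper names `start_echo_server` and `measure_rtt` are hypothetical, and the server runs on the loopback interface only so that the example is self-contained.

```python
import socket
import threading
import time


def start_echo_server() -> tuple[socket.socket, int]:
    """Start a one-connection echo server on loopback; return (socket, port)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)

    def serve() -> None:
        conn, _ = server.accept()
        with conn:
            while True:
                data = conn.recv(1024)
                if not data:
                    break
                conn.sendall(data)  # echo the payload back unchanged

    threading.Thread(target=serve, daemon=True).start()
    return server, server.getsockname()[1]


def measure_rtt(host: str, port: int, samples: int = 5) -> list[float]:
    """Return round-trip times in seconds for `samples` small echo requests."""
    payload = b"ping"
    rtts = []
    with socket.create_connection((host, port), timeout=2.0) as sock:
        # Disable Nagle's algorithm so small writes are sent immediately.
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(samples):
            start = time.perf_counter()
            sock.sendall(payload)
            received = b""
            while len(received) < len(payload):
                chunk = sock.recv(len(payload) - len(received))
                if not chunk:
                    raise ConnectionError("echo server closed the connection")
                received += chunk
            rtts.append(time.perf_counter() - start)
    return rtts


if __name__ == "__main__":
    server, port = start_echo_server()
    for rtt in measure_rtt("127.0.0.1", port):
        print(f"RTT: {rtt * 1000:.3f} ms")
    server.close()
```

Loopback figures will be far smaller than those seen across a real network; pointing `measure_rtt` at a remote echo service would include the propagation and queuing delays the text refers to.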

By accessing data in the file system cache or high-speed storage first, total I/O workloads can be reduced and performance improved. A management group is identified by a single operational database, one or more management servers, and one or more monitored agents and devices. Most of these suggestions are taken to the logical extreme but of course.
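The cache-first access pattern mentioned above can be sketched as a read-through cache. This is a minimal illustration, not a description of any particular file system cache: the `CachedReader` class and its `backing_reads` counter are hypothetical, and the slow storage is stood in for by any callable that takes a key.

```python
from typing import Callable, Dict


class CachedReader:
    """Serve reads from an in-memory cache, falling back to slower storage."""

    def __init__(self, backing_read: Callable[[str], bytes]) -> None:
        self._backing_read = backing_read  # assumed slow: disk, network, etc.
        self._cache: Dict[str, bytes] = {}
        self.backing_reads = 0  # counts how often slow storage is touched

    def read(self, key: str) -> bytes:
        if key in self._cache:
            return self._cache[key]  # cache hit: no I/O against slow storage
        self.backing_reads += 1
        value = self._backing_read(key)
        self._cache[key] = value  # remember the value for later reads
        return value


if __name__ == "__main__":
    slow_store = {"config": b"threshold=5"}
    reader = CachedReader(slow_store.__getitem__)
    for _ in range(3):
        reader.read("config")
    print(reader.backing_reads)  # only the first read reaches slow storage
```

A production cache would also need an eviction policy and invalidation when the underlying data changes; both are omitted here to keep the sketch short.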

Latency, known within gaming circles as lag, is a time interval between the stimulation and the response or, from a more general point of view, a time delay between the cause and the effect of some physical change in the system being observed. You are expected to lead it. A latency of a few hundred milliseconds is achievable for a complex fraud prevention system, but with very little wiggle room. This is to convince the management or a client that the equipment is justified.

The magnitude of the velocity at which a physical interaction propagates is always less than or equal to the speed of light. What follows is a random walk through a variety of best practices to keep in mind when designing low-latency systems. Latency is the time required to perform some action or to produce some result.

Storage latency estimation descriptors, or SLEDs, are an API that allows applications to understand and take advantage of the dynamic state of a storage system. In the past two years, we have selected a few design patterns that have helped us achieve our latency goals using standard technologies used by most SaaS companies: load balancers, queues, the JVM, REST APIs, etc. The system design interview is an open-ended conversation.