Latency Operating System Definition

In networking, latency is the total time it takes a data packet to travel from one node to another.

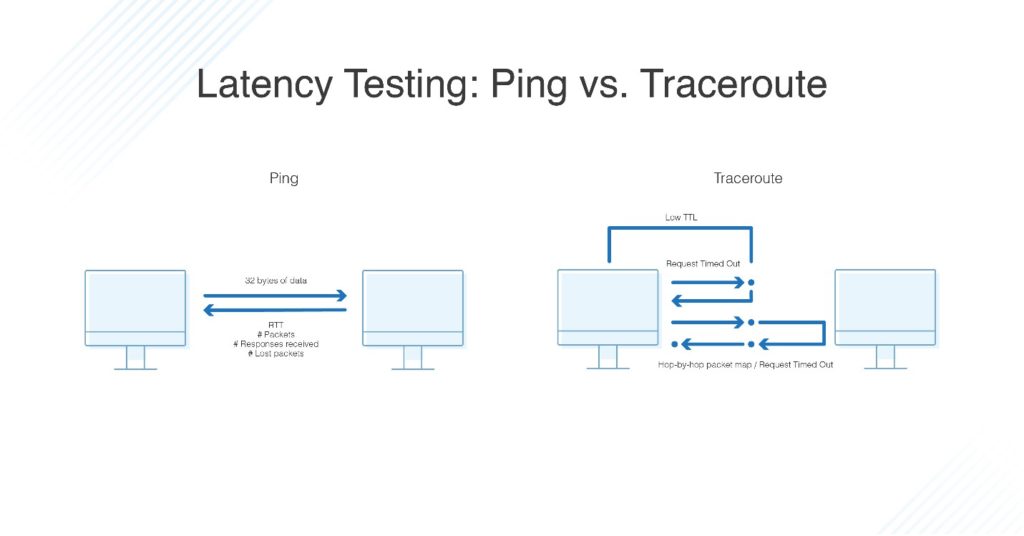

In computing, latency describes some type of delay. Known in gaming circles as lag, latency is the time interval between a stimulus and the response or, more generally, the time delay between the cause and the effect of some physical change in the system being observed. Network latency can be measured by determining the round-trip time (RTT) for a packet of data to travel to a destination and back again. For storage, the term rotational latency is typically applied to rotating devices such as hard disk drives, floppy drives, and even older magnetic drum systems, but not to tape drives.
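The round-trip measurement described above can be sketched in Python. This is a minimal illustration, assuming a local echo server on the loopback interface (`127.0.0.1`) rather than a real remote destination, so the numbers it reports reflect only the local network stack. The helper names `_recv_exact`, `_echo_server`, and `measure_rtt` are hypothetical, chosen for this example.

```python
from __future__ import annotations

import socket
import threading
import time

MESSAGE = b"ping"


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since a single recv() may return fewer."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed early")
        buf += chunk
    return buf


def _echo_server(server: socket.socket, count: int) -> None:
    """Hypothetical echo server: accept one client and echo `count` messages."""
    conn, _ = server.accept()
    with conn:
        for _ in range(count):
            conn.sendall(_recv_exact(conn, len(MESSAGE)))


def measure_rtt(samples: int = 5) -> list[float]:
    """Return round-trip times in seconds for small messages over loopback TCP."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.listen(1)
        port = server.getsockname()[1]
        worker = threading.Thread(target=_echo_server, args=(server, samples))
        worker.start()

        rtts = []
        with socket.create_connection(("127.0.0.1", port)) as client:
            # Disable Nagle's algorithm so each small message is sent at once.
            client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
            for _ in range(samples):
                start = time.perf_counter()
                client.sendall(MESSAGE)
                reply = _recv_exact(client, len(MESSAGE))  # wait for the echo
                rtts.append(time.perf_counter() - start)
                if reply != MESSAGE:
                    raise RuntimeError(f"unexpected reply: {reply!r}")
        worker.join()
    return rtts


if __name__ == "__main__":
    for i, rtt in enumerate(measure_rtt(), start=1):
        print(f"sample {i}: RTT = {rtt * 1e6:.1f} us")
```

Taking several samples matters because individual round trips vary; a real measurement tool would typically report the minimum, average, and spread rather than a single value.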

Network latency is the term used for any kind of delay that occurs in data communication over a network. In some contexts, the total time for a data packet to be transmitted to a destination and returned to its source (the round trip) is also called latency. In computer networking, latency expresses how much time it takes for a data packet to travel from one designated point to another. For a disk, the average rotational latency is half the time it takes the disk to make one revolution.
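The half-revolution rule for disks turns into simple arithmetic once the spindle speed is known. The sketch below assumes the speed is given in revolutions per minute (RPM); the function name `average_rotational_latency_ms` is hypothetical.

```python
def average_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency in milliseconds for a disk spinning at `rpm`."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    ms_per_revolution = 60_000.0 / rpm  # 60,000 ms per minute / revolutions per minute
    return ms_per_revolution / 2  # on average, the target sector is half a revolution away


for rpm in (5400, 7200, 15000):
    print(f"{rpm:>6} RPM: {average_rotational_latency_ms(rpm):.2f} ms")
```

For example, a 7200 RPM drive completes one revolution in about 8.33 ms, so its average rotational latency is about 4.17 ms; at 15000 RPM it drops to 2.00 ms.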

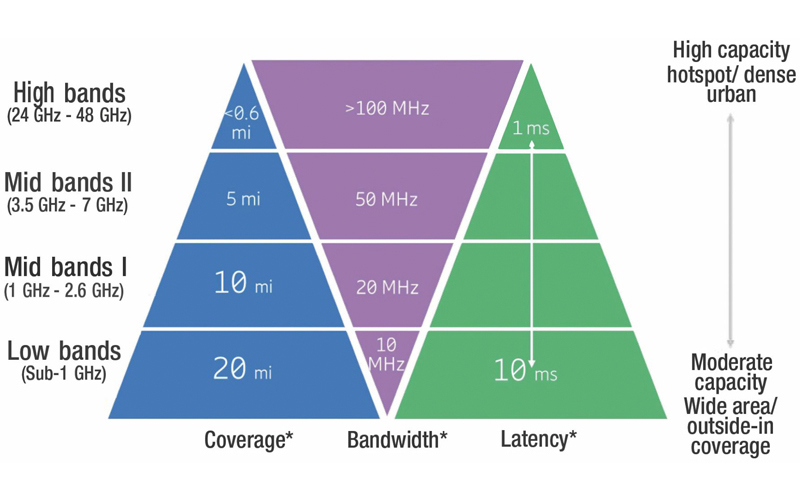

Two common examples of latency are network latency and disk latency. Because interrupts arrive from different sources via different paths, interrupt latency naturally depends on the type of interrupt. Network connections in which only small delays occur are called low-latency networks, whereas connections that suffer from long delays are called high-latency networks. Ideally, latency should be as close to zero as possible.

The term latency refers to any of several kinds of delays typically incurred in the processing of network data. In an operating system, latency is the time between when an interrupt occurs and when the processor starts to run code to process the interrupt. A real-time operating system (RTOS) tries to guarantee that this latency stays under a specific limit.
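True interrupt latency can only be measured at the kernel or hardware level, but a user-space analogy shows the idea and why an RTOS cares about the worst case rather than the average. In this sketch, which is an assumption-laden stand-in and not an interrupt measurement, calling `threading.Event.set()` plays the role of the interrupt and the code that runs after `Event.wait()` returns plays the role of the handler. The names `measure_wakeup_latency` and `meets_bound`, and the 1 ms bound, are hypothetical.

```python
from __future__ import annotations

import threading
import time


def measure_wakeup_latency(trials: int = 20) -> list[float]:
    """Seconds from signalling an event to a waiting thread resuming.

    A user-space stand-in for interrupt latency, not a kernel measurement.
    """
    latencies = []
    for _ in range(trials):
        waiting = threading.Event()
        fire = threading.Event()
        woke_at: list[float] = []

        def handler() -> None:
            waiting.set()
            fire.wait()  # block until the simulated "interrupt" arrives
            woke_at.append(time.perf_counter())

        worker = threading.Thread(target=handler)
        worker.start()
        waiting.wait()
        time.sleep(0.001)  # give the handler time to block in fire.wait()
        raised_at = time.perf_counter()
        fire.set()  # the simulated "interrupt"
        worker.join()
        latencies.append(woke_at[0] - raised_at)
    return latencies


def meets_bound(latencies: list[float], bound_s: float) -> bool:
    """RTOS-style check: the worst case, not the average, must stay under the bound."""
    return max(latencies) <= bound_s


if __name__ == "__main__":
    samples = measure_wakeup_latency()
    print(f"worst case: {max(samples) * 1e6:.1f} us, "
          f"average: {sum(samples) / len(samples) * 1e6:.1f} us")
    print("within hypothetical 1 ms bound:", meets_bound(samples, 0.001))
```

On a general-purpose operating system nothing guarantees that this check passes; the scheduler may delay the waiting thread arbitrarily, which is exactly the kind of unbounded delay a real-time operating system is designed to rule out.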

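An interrupt may not be serviced immediately for a number of reasons; for example, interrupts may be temporarily disabled, or a higher-priority interrupt may already be in service. Physically, latency is a consequence of the limited speed at which any physical interaction can propagate.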
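Because signals cannot travel faster than light, distance alone sets a lower bound on network latency. The sketch below assumes, as a common approximation, that light in optical fiber travels at roughly two thirds of its vacuum speed; real links add routing, queuing, and processing delays on top of this floor. The function name `propagation_delay_ms` and the `velocity_factor` parameter are hypothetical.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s
FIBER_VELOCITY_FACTOR = 2 / 3  # assumption: light in fiber moves at roughly 2/3 c


def propagation_delay_ms(distance_km: float,
                         velocity_factor: float = FIBER_VELOCITY_FACTOR) -> float:
    """Minimum one-way delay in ms imposed by signal propagation speed alone."""
    speed_km_s = SPEED_OF_LIGHT_KM_S * velocity_factor
    return distance_km / speed_km_s * 1000.0


one_way = propagation_delay_ms(1000)
print(f"1000 km of fiber: >= {one_way:.2f} ms one way, >= {2 * one_way:.2f} ms round trip")
```

Under this assumption, 1000 km of fiber costs about 5 ms one way and about 10 ms for a round trip before any other source of delay is counted.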